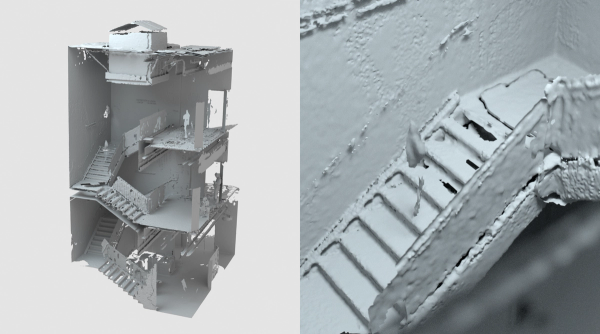

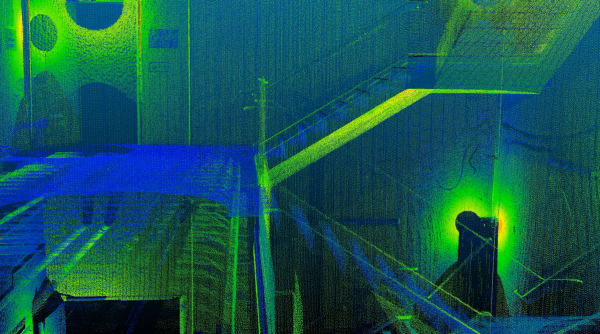

LIDAR Scan

2019

To map physical environments for a more accurate AR experience, we use the same technology that prevents self-driving cars from slamming into canyon walls painted to look like tunnels. We love this. Coyotes (carnivorous vulgaris) of the obsessive, sociopathic variety do not.

As impressive as mobile SLAM technology is, even the best versions of it model the world as though it were quickly sculpted in wax and left out on a summer day. And that won’t cut the mustard.

LIDAR scanning lets us capture environments with enough detail that we can animate a ball bouncing down the stairs and trust that the user will see the ball hit the exact step we intend.

We imported and calibrated the data in Autodesk ReCap, a tool used in the architecture and engineering industries. Then we optimized the dataset in the open-source software CloudCompare and exported it as a .PLY file. Our CT team wrote a Python script to import the data into Cinema 4D, and rebuilt the environment as a low-polygon model that our animators used as a template. Next, we wrote a series of custom animation-processing and export tools to support hundreds of dynamic balls and animations.
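For readers curious what the point-cloud step can look like in code, here is a minimal sketch, not our production script. It assumes an ASCII .PLY export whose vertex element comes first, and it uses simple voxel-grid averaging as a stand-in for the optimization done in the point-cloud tool. The function names, the sample data, and the voxel size are all hypothetical, and nothing here touches the Cinema 4D API.

```python
import math
from collections import defaultdict

# Hypothetical sample: a tiny ASCII PLY with positions and colors.
SAMPLE_PLY = """ply
format ascii 1.0
element vertex 4
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
end_header
0.0 0.0 0.0 255 255 255
0.1 0.1 0.1 255 255 255
1.0 1.0 1.0 128 128 128
1.2 1.0 1.0 128 128 128
"""


def read_ascii_ply_points(stream):
    """Return a list of (x, y, z) tuples from an ASCII PLY text stream.

    Assumption: the vertex element is the first element in the file.
    """
    if stream.readline().strip() != "ply":
        raise ValueError("not a PLY file")

    vertex_count = 0
    properties = []      # property names of the vertex element, in order
    in_vertex = False
    while True:
        line = stream.readline()
        if not line:
            raise ValueError("header ended unexpectedly")
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "format" and tokens[1] != "ascii":
            raise ValueError("this sketch only handles ASCII PLY")
        if tokens[0] == "element":
            in_vertex = tokens[1] == "vertex"
            if in_vertex:
                vertex_count = int(tokens[2])
        elif tokens[0] == "property" and in_vertex:
            properties.append(tokens[-1])
        elif tokens[0] == "end_header":
            break

    ix, iy, iz = (properties.index(name) for name in ("x", "y", "z"))
    points = []
    for _ in range(vertex_count):
        values = stream.readline().split()
        points.append((float(values[ix]), float(values[iy]), float(values[iz])))
    return points


def voxel_downsample(points, voxel_size):
    """Replace all points that share a voxel with their centroid."""
    cells = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        cells[key].append((x, y, z))
    return [
        tuple(sum(axis) / len(members) for axis in zip(*members))
        for members in cells.values()
    ]
```

Calling `voxel_downsample(read_ascii_ply_points(open("scan.ply")), 0.05)` on a hypothetical `scan.ply` would thin the cloud to one point per 5 cm cube (if the scan is in meters), which is the kind of reduction that makes a dense scan manageable as a modeling reference.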

Advertisements have been promising experiences like this as the future of mobile AR for years. It was exciting to see it running on consumer phones, and to know that we’re a significant step closer to the delight of world-aware AR being available to everyone. Even coyotes.