Moshing

2019

A project for Apple Music Korea, through MAL, for their Memoji campaign. The aesthetic was based on glitch graphics and visuals, so we built tools to create an ownable effect specifically for this spot.

Our team created a couple of unique effects to be peppered throughout the Memoji ad. Rather than using an off-the-shelf solution for datamoshing and glitch graphics, we wrote custom software using openFrameworks and bespoke GLSL shaders.

The first ask was for a riff on the well-known technique of datamoshing. We needed a glitch distortion that felt unique and visually distinguishable from a typical broadcast glitch. The second ask was to match a storyboard frame showing an explosion of liquid color emanating outward from the Memoji head. Both styles relied on similar techniques.

Raw output from the datamosh effect. This was before grading and compositing by the animators.

For the datamosh look, we calculate the direction of movement in the source footage (optical flow) and use it to displace pixels. This runs in a feedback loop: displacing pixels in a direction causes the optical flow to pick up and amplify any motion from the source, which gave rise to the glorpy textures we ended up with. While everything mixes around, we drip in new pixel colors to keep the simulation from diffusing to a solid color. We also dial in some blocky structure so that the distortions are more angular.
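To make that feedback loop concrete, here is a minimal CPU sketch in Python. It is not the project's openFrameworks/GLSL code. It assumes grayscale frames stored as nested lists and swaps in simple block matching (one motion vector per block, as block-based video codecs use) for a true optical-flow estimator, which also produces the blocky, angular structure directly. Where the flow is measured (on the source frames, as here, or on the moshed buffer) is another assumption. `block_flow`, `mosh_step`, `gain`, and `drip` are hypothetical names.

```python
# Minimal CPU sketch of one datamosh feedback step on small grayscale frames.
# Assumptions (not the project's code): frames are 2D lists of floats in [0, 1],
# and block matching stands in for optical flow. All names are hypothetical.


def clamp(v, lo, hi):
    return max(lo, min(v, hi))


def block_cost(prev, curr, bx, by, block, dx, dy):
    """Sum of absolute differences between one block of `curr` and the pixels
    of `prev` it would have come from if it had moved by (dx, dy)."""
    h, w = len(curr), len(curr[0])
    cost = 0.0
    for y in range(by, min(by + block, h)):
        for x in range(bx, min(bx + block, w)):
            py = clamp(y - dy, 0, h - 1)
            px = clamp(x - dx, 0, w - 1)
            cost += abs(curr[y][x] - prev[py][px])
    return cost


def block_flow(prev, curr, block=4, search=2):
    """One (dx, dy) motion vector per block; every pixel in a block shares it,
    which is what makes the later displacement blocky and angular."""
    h, w = len(curr), len(curr[0])
    flow = [[(0, 0)] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            # Start from "no motion" so ties (e.g. flat regions) stay still.
            best = (0, 0)
            best_cost = block_cost(prev, curr, bx, by, block, 0, 0)
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    cost = block_cost(prev, curr, bx, by, block, dx, dy)
                    if cost < best_cost:
                        best, best_cost = (dx, dy), cost
            for y in range(by, min(by + block, h)):
                for x in range(bx, min(bx + block, w)):
                    flow[y][x] = best
    return flow


def mosh_step(feedback, source, flow, gain=1.0, drip=0.1):
    """Displace the feedback buffer along `flow`, then drip in a little of the
    source frame so the picture never diffuses to one flat color."""
    h, w = len(feedback), len(feedback[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Gather lookup, like a fragment shader sampling the previous output.
            sy = clamp(round(y - gain * dy), 0, h - 1)
            sx = clamp(round(x - gain * dx), 0, w - 1)
            out[y][x] = (1.0 - drip) * feedback[sy][sx] + drip * source[y][x]
    return out


# Usage: a single bright pixel moves one step right between two 8x8 frames.
prev = [[0.0] * 8 for _ in range(8)]
curr = [[0.0] * 8 for _ in range(8)]
prev[1][1] = 1.0
curr[1][2] = 1.0

flow = block_flow(prev, curr)
frame = curr
for _ in range(3):
    # Each output is fed back in as the next step's feedback buffer.
    frame = mosh_step(frame, curr, flow)
```

With these inputs the top-left 4x4 block gets the vector (1, 0) and every other block gets (0, 0). A single `mosh_step` pushes the bright pixel one more pixel to the right while the drip term leaves a faint copy of the source behind. Pixels on either side of a block boundary sample from different directions, which is where the hard, angular seams come from.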

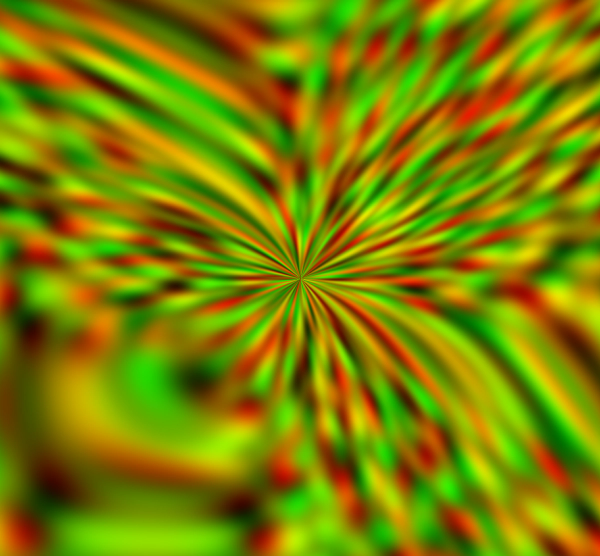

Raw color explosions + moshing outputs

The liquid explosion effect was made in a similar way: multiple shaders were daisy-chained in a feedback loop. The general idea is to drizzle color into the center of the frame and use curl noise to displace pixels toward the edges. Each displacement is relatively small, but it accumulates over time because the output color is fed back in as the next input: the last output gets displaced, and the cycle begins anew.
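A similarly hedged sketch of the liquid-explosion loop, again in plain Python rather than chained GLSL passes. It assumes a sum-of-sines potential in place of real noise (Perlin or simplex noise would slot in), derives 2D curl noise by rotating that potential's gradient by 90 degrees, `(dpsi/dy, -dpsi/dx)`, which gives a divergence-free swirl, and adds a small outward push so color drifts toward the edges. `explode_step`, `push`, `swirl`, and `drizzle_radius` are hypothetical names and values.

```python
import math

# Hypothetical sketch of a liquid-explosion feedback loop on a grayscale grid.
# Assumptions: a sum-of-sines potential stands in for real noise, and the
# outward push and swirl strengths are illustrative, not the project's values.


def potential(x, y, t):
    """Smooth scalar field; any smooth noise (Perlin, simplex) could replace it."""
    return (math.sin(0.35 * x + t) * math.cos(0.27 * y - 0.5 * t)
            + 0.5 * math.sin(0.2 * (x + y) + 1.3 * t))


def curl(x, y, t, eps=0.5):
    """2D curl noise: the potential's gradient rotated by 90 degrees,
    (dpsi/dy, -dpsi/dx), estimated with central differences."""
    dpdx = (potential(x + eps, y, t) - potential(x - eps, y, t)) / (2 * eps)
    dpdy = (potential(x, y + eps, t) - potential(x, y - eps, t)) / (2 * eps)
    return dpdy, -dpdx


def sample(img, x, y):
    """Bilinear lookup with edge clamping, like a clamped texture fetch."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def explode_step(img, t, push=0.6, swirl=0.8, drizzle_radius=1.5, drizzle=1.0):
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            r = math.hypot(dx, dy)
            # Small displacement: outward from the center plus a curl-noise swirl.
            ox, oy = (dx / r, dy / r) if r > 0 else (0.0, 0.0)
            curl_x, curl_y = curl(x, y, t)
            vx = push * ox + swirl * curl_x
            vy = push * oy + swirl * curl_y
            # Gather from upstream so color appears to flow outward.
            value = sample(img, x - vx, y - vy)
            # Drizzle fresh color into the center on every step.
            if r <= drizzle_radius:
                value = max(value, drizzle)
            out[y][x] = value
    return out


# Usage: start from black and run the feedback loop so the color spreads.
frame = [[0.0] * 17 for _ in range(17)]
for step in range(12):
    frame = explode_step(frame, t=0.1 * step)
```

Each step moves pixels by roughly a pixel or less, but because every output is sampled again on the next step, the small offsets compound into long, swirling streaks. Advancing `t` each step keeps the swirl pattern evolving instead of carving the same channels.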

An example of the noise we used. Red and green channels use different noise offsets.
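The caption points at a simple way to get a 2D vector field from a single noise function: sample it twice at different offsets and store the results in two channels. A sketch, assuming a hashed value noise (the project's actual noise function, scale, and offsets aren't stated) and hypothetical names `noise_texture` and `displacement`:

```python
import math

# Hypothetical sketch: pack two noise samples taken at different offsets into
# the red and green channels of a texture, then read them back as a 2D
# displacement. The hash constants, scale, and offsets are illustrative.


def hash01(ix, iy):
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    n = (ix * 374761393 + iy * 668265263) & 0xFFFFFFFF
    n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
    return (n ^ (n >> 16)) / 2**32


def value_noise(x, y):
    """Smoothly interpolated lattice noise in [0, 1]."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    # Smoothstep fade so the field has no visible grid creases.
    sx, sy = fx * fx * (3 - 2 * fx), fy * fy * (3 - 2 * fy)
    a, b = hash01(ix, iy), hash01(ix + 1, iy)
    c, d = hash01(ix, iy + 1), hash01(ix + 1, iy + 1)
    top = a + (b - a) * sx
    bottom = c + (d - c) * sx
    return top + (bottom - top) * sy


def noise_texture(w, h, scale=0.15, offset_r=(0.0, 0.0), offset_g=(31.7, 47.3)):
    """Each texel is (r, g): the same noise sampled at two different offsets."""
    tex = []
    for y in range(h):
        row = []
        for x in range(w):
            r = value_noise(x * scale + offset_r[0], y * scale + offset_r[1])
            g = value_noise(x * scale + offset_g[0], y * scale + offset_g[1])
            row.append((r, g))
        tex.append(row)
    return tex


def displacement(texel, amount=1.0):
    """Remap (r, g) from [0, 1] to a signed (dx, dy) vector."""
    r, g = texel
    return (amount * (2 * r - 1), amount * (2 * g - 1))


tex = noise_texture(32, 32)
dx, dy = displacement(tex[10][20], amount=2.0)
```

Because the two offsets are far apart relative to the noise scale, the red and green values behave as independent fields, so the resulting `(dx, dy)` vectors point in varied directions. With identical offsets, `r` would equal `g` and every vector would lie on the same diagonal.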

We were able to leverage openFrameworks to quickly build custom effects that would not have been possible in other environments. And because the effects ran in real time, we could quickly home in on what we liked and see the project in motion without waiting for a render to finish. In the future, we’d love to explore translating some of these techniques into an explorable 3D environment for AR camera filters.